B.E. Eighth Semester (Computer Science & Engineering) (C.G.S.) Winter 2023

10352 : Artificial Intelligence - 8 KS 01

QUESTIONS AND SHORT ANSWERS

- What are the four questions to be considered to define AI problems? Describe the state space for the "Water Jug problem".

- Describe problem characteristics in order to choose the most appropriate method. Explain with an example (any one).

- What are the applications of AI? Explain how AI technique exploits knowledge.

- Explain control strategy with an example of Tic-Tac-Toe.

- What are the different general-purpose strategies or weak methods? Explain any one with its importance and application.

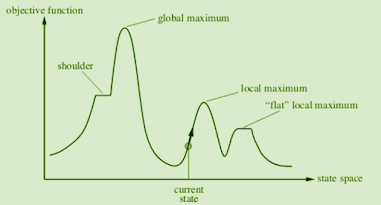

- What is the hill climbing procedure? Explain strategies to overcome its lacunas.

- What do you mean by heuristic? Define any two heuristic functions.

- What do you mean by problem reduction? Explain the procedure with an example.

- What is game playing? Explain the reasons that games appear to be a good domain in machine intelligence.

- Show and explain alpha-beta cut-off.

- Explain resolution in predicate logic by locating pairs of literals that cancel out.

- What do you mean by unification? Then explain the unification algorithm.

- Convert the following sentences into WFF and then write the procedure for conversion to clause form.

- Explain when two sentences are logically equivalent and describe the laws of equivalence.

- What are the methods to illustrate the structural representation of knowledge?

- Explain the process of finding the right structure of knowledge representation.

- How is knowledge represented in semantic nets in AI?

- What do you mean by understanding? What makes understanding hard in AI?

- Explain the structure of scripts and schemas in understanding.

- How are single and multiple sentences understood in natural language understanding?

- Explain the role of keyword matching in understanding. Also, explain semantic analysis.

1) What are the applications of AI? Explain how AI techniques exploit knowledge.

What are the applications of AI?

- Natural Language Processing (NLP): AI-powered NLP technologies enable machines to understand, interpret, and generate human language. Applications include virtual assistants (e.g., Siri, Alexa), language translation, sentiment analysis, and chatbots.

- Computer Vision: AI algorithms analyze and interpret visual data from images or videos, enabling applications such as facial recognition, object detection, autonomous vehicles, medical image analysis, and surveillance systems.

- Machine Learning: ML techniques enable systems to learn from data and make predictions or decisions without explicit programming. Applications include recommendation systems, fraud detection, predictive maintenance, personalized marketing, and healthcare diagnostics.

- Robotics: AI-driven robots perform tasks autonomously or semi-autonomously in various environments. Applications range from industrial automation and manufacturing to healthcare assistance, home automation, and exploration of hazardous environments.

- AI in Healthcare: AI technologies assist healthcare professionals in diagnosis, treatment planning, drug discovery, personalized medicine, medical imaging analysis, remote patient monitoring, and health informatics.

- Autonomous Systems: AI powers autonomous vehicles and drones (unmanned aerial vehicles, UAVs), enabling applications such as self-driving cars, automated delivery services, precision agriculture, and search and rescue missions.

Explain how AI techniques exploit knowledge.

- Knowledge Representation: AI systems represent knowledge using formal languages, ontologies, semantic networks, or other symbolic structures that capture concepts, relationships, and constraints within a domain. By encoding knowledge in a machine-readable format, AI systems can understand and manipulate information to perform tasks.

- Inference and Reasoning: AI systems use rules, logic, or probabilistic models to derive new knowledge or make decisions based on existing knowledge. Inference mechanisms allow AI systems to draw conclusions, make predictions, or solve problems by applying logical or statistical reasoning processes.

- Machine Learning: ML techniques leverage knowledge from data to learn patterns, correlations, and relationships that enable predictive modeling, classification, clustering, and optimization. By training on large datasets, ML algorithms extract valuable insights and generalize knowledge to make predictions on new data.

- Expert Systems: Expert systems capture domain-specific knowledge from human experts and encode it into a knowledge base, which is then used to provide expert-level advice, recommendations, or problem-solving capabilities. Expert systems rely on rules, heuristics, or case-based reasoning to emulate human expertise in specific domains.

- Natural Language Processing (NLP): NLP techniques extract knowledge from textual data, enabling machines to understand, interpret, and generate human language. NLP algorithms analyze linguistic structures, semantics, and context to extract information, perform sentiment analysis, answer questions, or generate human-like text.
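Knowledge representation and inference can be illustrated together with a tiny rule-based system. The sketch below is illustrative only: facts are plain strings, each rule is a hypothetical (premises, conclusion) pair, and `forward_chain` is a made-up helper that keeps firing rules until no new fact can be derived.

```python
def forward_chain(facts, rules):
    """Derive every fact that follows from `facts` using if-then `rules`.

    facts: iterable of strings; rules: list of (premises, conclusion) pairs,
    where premises is a set of strings.
    """
    known = set(facts)
    changed = True
    while changed:                      # repeat until a full pass adds nothing new
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)   # the rule fires: record its conclusion
                changed = True
    return known


# Hypothetical knowledge base for classifying animals
RULES = [
    ({"has_feathers"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
]
derived = forward_chain({"has_feathers", "cannot_fly", "swims"}, RULES)
```

The rules are the encoded knowledge and the loop is the inference mechanism: "is_bird" is derived first, which then lets the second rule conclude "is_penguin".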

2) What are the four questions to be considered to define AI problems? Describe the state space for the “Water Jug problem”.

What are the four questions to consider to define AI problems?

- What is the initial state?

- What are the possible actions?

- What is the transition model?

- What is the goal test?

Describe the state space for the "Water Jug problem".

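- Let's denote the state of the water jugs as (x, y), where x is the amount of water in the first jug and y is the amount in the second jug. In the standard version of the problem, the first jug holds 4 gallons and the second holds 3 gallons, neither has measuring marks, and the goal is to get exactly 2 gallons into the 4-gallon jug; the state space is then x ∈ {0, 1, 2, 3, 4}, y ∈ {0, 1, 2, 3}.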

- The initial state is typically represented as (0, 0), indicating that both jugs are initially empty.

- The possible actions (production rules) are:

  - Fill the first jug (if it is not already full).

  - Fill the second jug (if it is not already full).

  - Empty the first jug (if it is not already empty).

  - Empty the second jug (if it is not already empty).

  - Pour water from the first jug into the second jug until the second jug is full or the first jug is empty.

  - Pour water from the second jug into the first jug until the first jug is full or the second jug is empty.

- The transition model determines the resulting state after each action is taken, considering the capacities of the jugs and the amount of water poured.

- The goal test checks whether the desired amount of water is present in either jug, indicating a successful solution to the problem.
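The state space can be searched mechanically. This is a minimal breadth-first search sketch, assuming the standard 4-gallon and 3-gallon jugs with the goal of 2 gallons in the 4-gallon jug (the function name and defaults are illustrative). Each successor corresponds to one of the six actions listed above.

```python
from collections import deque


def water_jug(cap_a=4, cap_b=3, goal=2):
    """Breadth-first search over states (x, y); returns a shortest list of states to the goal."""
    start = (0, 0)
    parent = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        if x == goal:               # goal test: `goal` gallons in the first jug
            path, state = [], (x, y)
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        pour_ab = min(x, cap_b - y)  # amount that can move from jug A into jug B
        pour_ba = min(y, cap_a - x)  # amount that can move from jug B into jug A
        successors = [
            (cap_a, y), (x, cap_b),          # fill A, fill B
            (0, y), (x, 0),                  # empty A, empty B
            (x - pour_ab, y + pour_ab),      # pour A into B
            (x + pour_ba, y - pour_ba),      # pour B into A
        ]
        for s in successors:
            if s not in parent:
                parent[s] = (x, y)
                queue.append(s)
    return None
```

Because breadth-first search explores states level by level, the returned sequence uses the fewest possible actions (six for the standard 4/3 instance).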

3) Describe problem characteristics in order to choose the most appropriate method. Explain with an example (any one).

Example: route planning, i.e., finding a route between two locations in a city. The characteristics of this problem point to suitable methods:

- Search Space Complexity: Because the number of possible routes in a city can be enormous, heuristic search algorithms such as A* (A-star) are a good fit. A* navigates the search space efficiently by first expanding the paths that a heuristic evaluation function estimates to be closest to the goal.

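- Optimality: If finding the optimal solution (shortest path) is crucial, Dijkstra's algorithm (an uninformed, uniform-cost search) or A* with an admissible heuristic (one that never overestimates the remaining cost) guarantees an optimal path when all road costs are non-negative. These methods expand paths in order of cost (plus the heuristic estimate, for A*), so the first time the goal is selected for expansion, the best route has been found.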

- Domain-Specific Knowledge: In route planning, domain-specific knowledge such as traffic conditions, road closures, and historical route data can significantly improve the efficiency and accuracy of the solution. Techniques like expert systems or knowledge-based systems can incorporate such knowledge to make informed decisions during pathfinding.

- Dynamic Environments: If the city's road network undergoes frequent changes due to construction or traffic updates, adaptive or online learning approaches like reinforcement learning may be suitable. Reinforcement learning agents can continuously interact with the environment, learning optimal policies through trial and error.
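A minimal A* sketch for the route-planning example. The road network, coordinates, and costs below are hypothetical, and the heuristic is the straight-line (Euclidean) distance to the goal. It is admissible here because every road costs at least its straight-line length, so no route can be cheaper than the straight-line estimate.

```python
import heapq
import math

# Hypothetical city map: node coordinates and two-way roads (u, v, cost)
COORDS = {"A": (0, 0), "B": (2, 0), "C": (2, 2), "D": (4, 0), "G": (4, 2)}
EDGES = [("A", "B", 2), ("B", "D", 2), ("D", "G", 2),
         ("A", "C", 3), ("C", "G", 2), ("B", "C", 2)]


def build_graph(edges):
    graph = {}
    for u, v, cost in edges:                 # roads are two-way
        graph.setdefault(u, {})[v] = cost
        graph.setdefault(v, {})[u] = cost
    return graph


def a_star(graph, coords, start, goal):
    """Return (path, cost) of a cheapest path from start to goal, or (None, inf)."""
    def h(node):                             # straight-line distance to the goal
        (x1, y1), (x2, y2) = coords[node], coords[goal]
        return math.hypot(x1 - x2, y1 - y2)

    frontier = [(h(start), 0, start, [start])]   # entries: (f = g + h, g, node, path)
    best_g = {start: 0}
    while frontier:
        _, g, node, path = heapq.heappop(frontier)
        if node == goal:
            return path, g
        if g > best_g[node]:
            continue                         # stale entry: a cheaper route was found later
        for nbr, cost in graph[node].items():
            new_g = g + cost
            if new_g < best_g.get(nbr, math.inf):
                best_g[nbr] = new_g
                heapq.heappush(frontier, (new_g + h(nbr), new_g, nbr, path + [nbr]))
    return None, math.inf
```

With h(n) = 0 the same loop behaves like Dijkstra's algorithm; the heuristic only changes the order in which routes are expanded.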

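4) Explain control strategy with an example of Tic-Tac-Toe.

A control strategy decides which rule or move to apply next during search. A good control strategy causes motion (each step makes progress) and is systematic (it does not wander back over the same states). For Tic-Tac-Toe, the minimax procedure serves as the control strategy for choosing moves: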

- Maximizing Player ('X'): The maximizing player ('X') aims to maximize its chances of winning. It evaluates each possible move by recursively exploring the game tree to determine the highest possible utility (winning or drawing).

- Minimizing Player ('O'): The minimizing player ('O') represents the opponent and seeks to minimize the maximizing player's chances of winning. It evaluates each possible countermove by recursively exploring the game tree to determine the lowest possible utility (losing or drawing).

- Utility Function: The utility function assigns scores to terminal states based on the outcome (win, lose, or draw). In Tic-Tac-Toe, winning states are assigned a high score (e.g., +1), losing states are assigned a low score (e.g., -1), and draw states are assigned a neutral score (e.g., 0).

- Backing Up Values: After the terminal states are scored, the algorithm propagates (backs up) utility values from the leaves toward the root: a maximizing node takes the highest value among its children and a minimizing node takes the lowest. At the root, the maximizing player chooses the move with the highest backed-up value.
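A minimal minimax sketch for Tic-Tac-Toe, assuming the board is a 9-character string (cells 0-8, row by row) of "X", "O" and " ", with utilities +1, -1 and 0 from X's point of view as described above. The function names are illustrative; `functools.lru_cache` memoizes positions so the whole game tree (a few thousand distinct positions) is searched quickly.

```python
from functools import lru_cache

# The eight winning lines of a 3x3 board, cells numbered 0-8 row by row
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def play(board, i, player):
    """Return a new board with `player` placed in cell i."""
    return board[:i] + player + board[i + 1:]


@lru_cache(maxsize=None)
def minimax(board, player):
    """Utility of `board` for X (+1 win, 0 draw, -1 loss) with `player` to move."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1       # terminal: someone has won
    if " " not in board:
        return 0                            # terminal: board full, draw
    other = "O" if player == "X" else "X"
    values = [minimax(play(board, i, player), other)
              for i, cell in enumerate(board) if cell == " "]
    # MAX (X) backs up the highest value, MIN (O) the lowest
    return max(values) if player == "X" else min(values)


def best_move(board, player):
    """Choose the empty cell whose resulting position has the best backed-up value."""
    other = "O" if player == "X" else "X"
    moves = [i for i, cell in enumerate(board) if cell == " "]
    value = lambda i: minimax(play(board, i, player), other)
    return max(moves, key=value) if player == "X" else min(moves, key=value)
```

For example, `best_move("XX OO    ", "X")` returns 2, completing the top row, and `minimax(" " * 9, "X")` is 0 because perfect play by both sides ends in a draw.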

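5) What are the different general-purpose strategies or weak methods? Explain any one with its importance and application.

Weak methods are general-purpose search strategies that do not depend on domain-specific knowledge, for example generate-and-test, hill climbing, best-first search, problem reduction, constraint satisfaction, and means-ends analysis. Hill Climbing is explained here; its importance and applications are: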

- Simplicity: Hill Climbing is easy to understand and implement, making it accessible even to those with limited computational resources or expertise in optimization techniques.

- Efficiency: The algorithm typically requires minimal computational overhead and can quickly converge to a local optimum, making it suitable for problems with large search spaces or real-time constraints.

- Flexibility: Hill Climbing can be adapted to various problem domains and objective functions by customizing the evaluation criteria and neighborhood structure. It is widely used in fields such as engineering design, machine learning, scheduling, and resource allocation.

- Local Search: While Hill Climbing focuses on local search and may get stuck in suboptimal solutions (local optima), it serves as a foundational technique for more sophisticated optimization algorithms that explore the search space more extensively.

- Heuristic Search: Hill Climbing can incorporate heuristic information or domain-specific knowledge to guide the search process, enhancing its effectiveness in finding high-quality solutions within a reasonable time frame.

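6) What is the hill climbing procedure? Explain strategies to overcome its lacunae.

Hill climbing is a local search method that starts from some state and repeatedly moves to a better neighboring state, keeping only the current state in memory. Its procedure is: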

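1. Initialization: Start with an initial solution or state.

2. Evaluation: Evaluate the current solution using an objective function or evaluation criteria.

3. Neighbor Generation: Generate neighboring solutions by making small changes (perturbations) to the current solution. The choice of moves defines how the search space is explored.

4. Selection: Choose the neighbor that improves the objective function value the most (the steepest uphill direction), and move to it only if it is better than the current solution. Simple hill climbing instead moves to the first neighbor that is better.

5. Termination: Repeat steps 2-4 until a termination condition is met (e.g., no neighbor improves on the current solution, or a maximum number of iterations is reached).

Hill climbing can get stuck at a local maximum (a state better than all its neighbors but worse than the global best), on a plateau (a flat region where all neighbors have the same value), or on a ridge (a sloping high region whose direction of ascent does not match any single available move, so every individual move looks downhill). Strategies to overcome these lacunae: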
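The procedure above can be sketched as a small generic function. This is a minimal steepest-ascent sketch; `hill_climb`, `neighbors`, and `score` are illustrative names, and the caller supplies the neighbor generator and objective function for the problem at hand.

```python
from typing import Callable, Iterable, TypeVar

State = TypeVar("State")


def hill_climb(start: State,
               neighbors: Callable[[State], Iterable[State]],
               score: Callable[[State], float],
               max_iters: int = 1000) -> State:
    """Steepest-ascent hill climbing: returns a state where no neighbor is better."""
    current = start                                  # initialization
    for _ in range(max_iters):                       # iteration limit as a safety stop
        candidates = list(neighbors(current))        # neighbor generation
        if not candidates:
            return current
        best = max(candidates, key=score)            # selection: steepest uphill move
        if score(best) <= score(current):            # evaluation: no improvement left
            return current                           # local optimum or plateau
        current = best
    return current
```

For example, maximizing f(x) = -(x - 7)^2 over the integers with moves x - 1 and x + 1, `hill_climb(0, lambda x: [x - 1, x + 1], lambda x: -(x - 7) ** 2)` climbs step by step to 7.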

- Random Restart: Address the issue of getting stuck in local optima by performing multiple independent Hill Climbing runs from different initial solutions. By randomly restarting the optimization process, the algorithm explores different regions of the search space and increases the likelihood of finding a global optimum.

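- Simulated Annealing: Extend Hill Climbing with probabilistic acceptance of downhill moves to escape local optima. A worse move is accepted with a probability (typically exp(-Δ/T) for a loss Δ at temperature T) that falls as the temperature is lowered over time, so the algorithm explores widely at first and behaves more like ordinary hill climbing later. With a sufficiently slow cooling schedule it finds a global optimum with high probability, although this is not guaranteed with the faster schedules used in practice.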

- Tabu Search: Prevent cycling and repetitive behavior by maintaining a memory of recently visited solutions or moves (tabu list) and avoiding revisiting them in subsequent iterations. Tabu Search introduces diversification and intensification strategies to balance exploration and exploitation of the search space effectively.

- Genetic Algorithms: Apply evolutionary principles such as selection, crossover, and mutation to guide the search in a global, population-based manner. Genetic Algorithms maintain a population of candidate solutions and evolve it over successive generations, which lets them explore many regions of the search space at once and often reach high-quality solutions.
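A minimal sketch of the Random Restart strategy on a hypothetical one-dimensional landscape (a list of scores indexed by position; all names and numbers are made up for illustration). A single hill climb started at position 0 stops at the local maximum at index 2, while restarting from random positions reaches the global maximum at index 7.

```python
import random

# Hypothetical landscape: score of each position 0..9
LANDSCAPE = [1, 3, 5, 4, 2, 6, 9, 12, 8, 3]


def neighbors(i):
    """Positions one step left or right, staying inside the landscape."""
    return [j for j in (i - 1, i + 1) if 0 <= j < len(LANDSCAPE)]


def hill_climb(i):
    """Steepest-ascent hill climbing from position i; returns a local maximum."""
    while True:
        best = max(neighbors(i), key=lambda j: LANDSCAPE[j])
        if LANDSCAPE[best] <= LANDSCAPE[i]:
            return i
        i = best


def random_restart(restarts, seed=0):
    """Run hill climbing from several random starts and keep the best result."""
    rng = random.Random(seed)          # seeded so the run is reproducible
    results = [hill_climb(rng.randrange(len(LANDSCAPE))) for _ in range(restarts)]
    return max(results, key=lambda j: LANDSCAPE[j])
```

Each restart is an independent hill climb, so the strategy only needs one start to land in the basin of the global maximum.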

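7) What do you mean by heuristic? Define any two heuristic functions.

A heuristic is a rule of thumb that guides search toward a solution; it improves the efficiency of search, possibly at the cost of completeness or optimality. A heuristic function h(n) estimates the cost of reaching the goal from state n. Two common heuristic functions are: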

- Manhattan Distance: The Manhattan Distance heuristic is commonly used in pathfinding algorithms such as A* (A-star) for navigating grid-based environments. It measures the distance between two points on a grid by summing the absolute differences of their horizontal and vertical coordinates: \( \text{Manhattan Distance} = |x_1 - x_2| + |y_1 - y_2| \), where \( (x_1, y_1) \) and \( (x_2, y_2) \) are the coordinates of the two points. When each move is one step up, down, left, or right at unit cost, this never exceeds the true path cost, so it is admissible, meaning it never overestimates the cost to reach the goal.

- Euclidean Distance: The Euclidean Distance heuristic calculates the straight-line distance between two points in Euclidean space. It is also widely used in optimization and clustering algorithms to estimate proximity or similarity between data points. The Euclidean Distance between two points \( (x_1, y_1) \) and \( (x_2, y_2) \) is \( \text{Euclidean Distance} = \sqrt{(x_1 - x_2)^2 + (y_1 - y_2)^2} \). It follows from the Pythagorean theorem and gives the exact straight-line distance in continuous spaces, so it is admissible whenever no path can be shorter than a straight line. On a grid restricted to horizontal and vertical steps it is still admissible, but it underestimates more than Manhattan Distance and therefore guides the search less effectively.
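Both heuristic functions translate directly from their formulas. This minimal sketch assumes points are (x, y) tuples; the function names are illustrative.

```python
import math


def manhattan(a, b):
    """|x1 - x2| + |y1 - y2|: exact step count on an open 4-connected grid."""
    (x1, y1), (x2, y2) = a, b
    return abs(x1 - x2) + abs(y1 - y2)


def euclidean(a, b):
    """Straight-line distance sqrt((x1 - x2)^2 + (y1 - y2)^2)."""
    (x1, y1), (x2, y2) = a, b
    return math.hypot(x1 - x2, y1 - y2)
```

For the points (0, 0) and (3, 4), Manhattan Distance gives 7 and Euclidean Distance gives 5. Both are admissible for 4-connected grid movement, but the Manhattan value is closer to the true cost, so it prunes more of the search.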

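8) What do you mean by problem reduction? Explain the procedure with an example.

Problem reduction is a problem-solving approach in which a complex problem is decomposed into simpler subproblems that are solved separately and whose solutions are then combined. The decomposition is usually represented as an AND-OR graph: an AND arc means that all of its subproblems must be solved, while OR branches are alternative ways of solving the same problem. The AO* algorithm searches such graphs. The procedure is: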

- Identify the Original Problem: Start with a complex problem that needs to be solved.

- Break Down the Problem: Decompose the original problem into smaller, more manageable subproblems. This step may involve identifying relevant variables, constraints, and dependencies within the problem domain.

- Apply Solution Methods: Apply known solution methods or algorithms to solve each subproblem independently. These methods may include search algorithms, optimization techniques, or problem-specific heuristics.

- Combine Solutions: Integrate the solutions obtained from solving the subproblems to derive a solution to the original problem. This step may involve aggregating, synthesizing, or reconciling the individual solutions to ensure coherence and consistency.

- Verify and Refine: Validate the solution derived from problem reduction by testing it against the original problem specifications and criteria. If necessary, refine the solution based on feedback or additional requirements.

Example: planning deliveries for a logistics company can be reduced to the following subproblems:

- Determining the optimal route for each individual delivery truck.

- Optimizing the allocation of goods to trucks to ensure efficient use of resources.

- Scheduling the departure and arrival times for each truck to avoid congestion and delays.

- Minimizing the overall transportation costs while meeting delivery deadlines and service level agreements.
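A minimal sketch of problem reduction on a hypothetical AND-OR tree for the delivery example. An AND node is solved only when all of its subproblems are solved (their costs add up), while an OR node is solved by its cheapest alternative. The node names and costs are made up, and this is a plain recursive evaluation of a fully known tree, not the AO* search algorithm.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    name: str
    kind: str = "leaf"        # "leaf", "and", or "or"
    cost: float = 0.0         # cost of solving a leaf subproblem directly
    children: List["Node"] = field(default_factory=list)


def solve(node):
    """Return (total cost, leaf subproblems solved) for an AND-OR tree."""
    if node.kind == "leaf":
        return node.cost, [node.name]
    results = [solve(child) for child in node.children]
    if node.kind == "and":
        # every subproblem must be solved: add costs and combine the solutions
        total = sum(cost for cost, _ in results)
        leaves = [leaf for _, sub in results for leaf in sub]
        return total, leaves
    # "or": alternatives, so keep only the cheapest one
    return min(results, key=lambda r: r[0])


# Hypothetical decomposition of the delivery-planning problem
plan = Node("plan deliveries", "and", children=[
    Node("route trucks", "or", children=[
        Node("greedy routing", cost=5),
        Node("exact routing", cost=9),
    ]),
    Node("allocate goods", cost=3),
    Node("schedule departures", cost=2),
])
```

Here `solve(plan)` picks the cheaper routing alternative and combines it with the other two subproblems, for a total cost of 10.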

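9) What is game playing? Explain the reasons that games appear to be a good domain in machine intelligence.

Game playing is the branch of AI concerned with programs that choose moves in games, most often two-player, turn-taking games with perfect information such as chess, by searching a game tree of possible moves and replies. Games are a good domain for machine intelligence because of: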

- Well-Defined Rules and Goals: Games typically have clear and well-defined rules, objectives, and win conditions, making them suitable for algorithmic analysis and decision-making. This structured environment provides a concrete framework for designing and evaluating AI algorithms.

- Complexity and Challenge: Many games, especially strategic board games like chess or Go, exhibit high levels of complexity and strategic depth that challenge human cognitive abilities. By developing AI systems capable of playing such games at a competitive level, researchers can explore advanced techniques in problem-solving, decision-making, and planning.

- Variety and Diversity: Games come in various forms, genres, and complexity levels, offering a diverse set of challenges for AI research. From classic board games to modern video games, each game presents unique characteristics and gameplay mechanics that stimulate innovation and creativity in AI algorithm development.

- Accessible Benchmarking: Games provide accessible and standardized benchmarks for evaluating and comparing the performance of AI systems. By measuring success rates, win rates, or other performance metrics in game-playing scenarios, researchers can assess the capabilities and limitations of different AI techniques in a controlled environment.

- Human Competitiveness: Games offer opportunities for AI systems to compete against human players, including professionals, in real-world settings. Success in competitive games demonstrates the proficiency and adaptability of AI algorithms, driving advancements in machine intelligence and human-computer interaction.

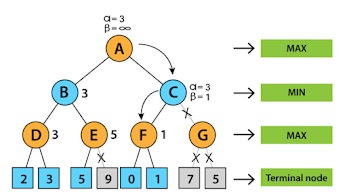

10) Show and explain alpha-beta cut-off.

- Initialization: Start with initial values for alpha and beta. Alpha represents the maximum score found so far for the maximizing player, while beta represents the minimum score found so far for the minimizing player. Initially, alpha is set to negative infinity, and beta is set to positive infinity.

- Traversal: Traverse the game tree using the minimax algorithm, evaluating nodes recursively in a depth-first manner. As the algorithm progresses, update the values of alpha and beta based on the current state of the search.

- Pruning: Prune branches of the game tree that cannot affect the final decision. At a maximizing node, stop evaluating further children once the node's value is greater than or equal to beta; at a minimizing node, stop once its value is less than or equal to alpha. In both cases alpha ≥ beta, meaning the opponent already has a better option elsewhere and will never allow play to reach this node.

- Cut-off: When a node is pruned due to alpha-beta conditions, it is said to be cut off from further evaluation, hence the term "alpha-beta cut-off." This pruning technique significantly reduces the number of nodes explored in the game tree, leading to faster and more efficient search algorithms.

- Example: Suppose a maximizing root has already found a move worth 3, so alpha = 3. While exploring its second child, a minimizing node, the first leaf returns 2. The minimizing node's value can now only be 2 or lower, which is already worse for the maximizer than 3, so the remaining children of that minimizing node are pruned (cut off) without being evaluated.
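A minimal alpha-beta sketch on a hypothetical two-level tree written as nested lists: the root is a maximizing node, each inner list is a minimizing node, and numbers are leaf utilities. The `visited` list (an illustrative addition) records which leaves are actually evaluated, so the effect of the cut-offs is visible.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, visited=None):
    """Minimax value of `node` with alpha-beta pruning; leaves are numbers."""
    if visited is None:
        visited = []
    if isinstance(node, (int, float)):
        visited.append(node)                 # a leaf is evaluated
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, value)
            if alpha >= beta:
                break                        # cut-off: the MIN parent will avoid this node
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, value)
        if alpha >= beta:
            break                            # cut-off: the MAX parent already has better
    return value


# Root (MAX) with three MIN children
TREE = [[3, 5], [2, 9], [0, 7]]
```

Running `alphabeta(TREE, True, visited=seen)` with `seen = []` returns 3 and evaluates only the leaves 3, 5, 2, and 0: once a minimizing child yields a value below alpha = 3, its remaining leaves (9 and 7) are skipped.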

11) Explain resolution in predicate logic by locating pairs of literals that cancel out.

- Identification of Contradictory Pairs: Given a set of clauses, look for a literal in one clause and a literal in another clause that are complementary, meaning one is the negation of the other. In propositional logic, "p" and "~p" form such a pair. In predicate logic, the two literals only need to become complementary after unification; for example, "hate(x, Caesar)" and "~hate(Marcus, y)" cancel out under the substitution {x/Marcus, y/Caesar}.

- Application of Resolution: Apply the resolution rule to each contradictory pair identified above. If two clauses contain complementary literals, they can be resolved by eliminating those literals and combining the remaining literals (with the unifying substitution applied) into a new clause, called the resolvent.

- Generation of New Clauses: Repeat the resolution process iteratively, considering all possible pairs of contradictory literals in the given set of clauses. Each application of resolution generates a new clause (resolvent) that is added to the set of clauses, expanding the knowledge base.

- Termination and Conclusion: Continue applying resolution until the empty clause is derived or no new resolvents can be generated. Deriving the empty clause shows that the set of clauses is unsatisfiable. Resolution is normally used for refutation: to prove a statement, add its negation to the clauses and derive the empty clause. In propositional logic the process always terminates; in predicate logic it may run forever when the statement does not follow, because first-order entailment is only semi-decidable.
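A minimal propositional sketch of resolution refutation, assuming clauses are sets of literal strings with "~" marking negation. It deliberately omits the unification step that predicate logic needs, so it shows only how complementary pairs cancel out and how the empty clause signals a contradiction. The helper names are illustrative.

```python
from itertools import combinations


def negate(lit):
    """Return the complementary literal: p <-> ~p."""
    return lit[1:] if lit.startswith("~") else "~" + lit


def resolve(c1, c2):
    """Return all resolvents of two clauses (frozensets of literals)."""
    resolvents = []
    for lit in c1:
        if negate(lit) in c2:            # a complementary pair cancels out
            resolvents.append((c1 - {lit}) | (c2 - {negate(lit)}))
    return resolvents


def refutes(clauses):
    """True if the empty clause can be derived, i.e. the clauses are unsatisfiable."""
    known = set(map(frozenset, clauses))
    while True:
        new = set()
        for c1, c2 in combinations(known, 2):
            for r in resolve(c1, c2):
                if not r:
                    return True          # empty clause: contradiction found
                new.add(frozenset(r))
        if new <= known:
            return False                 # nothing new can be derived
        known |= new
```

To prove Q from P and P → Q, the negated goal ~Q is added: `refutes([{"P"}, {"~P", "Q"}, {"~Q"}])` derives Q, then resolves Q with ~Q into the empty clause.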

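12) What do you mean by unification? Then explain the unification algorithm.

Unification is the process of finding a substitution for variables that makes two logical expressions identical; it is the matching step used by resolution in predicate logic. The unification algorithm works as follows: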

- Identify Variables and Constants: Given two expressions or terms, identify the variables and constants present in each expression. Variables are placeholders that can be substituted with other terms, while constants are immutable values.

- Initialize Substitution: Start with an empty substitution, representing the absence of any variable assignments.

- Iterate Through Terms: Traverse both expressions together, comparing corresponding terms recursively, and apply the substitution built so far to each term before comparing it. For each pair of terms:

  - If both terms are identical, continue to the next pair.

  - If one term is a variable, bind it to the other term, provided the variable does not occur inside that term (the occurs check); otherwise return failure. If both terms are variables, bind one to the other.

  - If both terms are compound structures (predicates or functions) with the same symbol and the same number of arguments, recursively unify their arguments.

  - If two different constants are compared, or the predicate or function symbols or the numbers of arguments differ, return failure, indicating that unification is not possible.

- Return Substitution: If unification is successful (i.e., all pairs of terms can be made equal through substitutions), return the resulting substitution. This substitution provides a mapping of variables to terms that makes the two expressions identical or compatible.
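A minimal sketch of the unification algorithm with the occurs check. The term encoding is an assumption made for illustration: variables are strings starting with a lowercase letter (x, y), constants start with an uppercase letter (Marcus, Caesar), and a compound term such as hate(x, Caesar) is a tuple ("hate", "x", "Caesar"). Bindings are looked up through a small `walk` helper rather than applied eagerly.

```python
def is_var(t):
    """Variables are strings that start with a lowercase letter."""
    return isinstance(t, str) and t[:1].islower()


def walk(t, subst):
    """Follow variable bindings until reaching an unbound variable or a non-variable."""
    while is_var(t) and t in subst:
        t = subst[t]
    return t


def occurs(v, t, subst):
    """Occurs check: does variable v appear inside term t?"""
    t = walk(t, subst)
    if t == v:
        return True
    return isinstance(t, tuple) and any(occurs(v, arg, subst) for arg in t[1:])


def unify(a, b, subst=None):
    """Return a substitution dict that makes a and b identical, or None on failure."""
    if subst is None:
        subst = {}
    a, b = walk(a, subst), walk(b, subst)
    if a == b:
        return subst
    if is_var(a):
        return None if occurs(a, b, subst) else {**subst, a: b}
    if is_var(b):
        return None if occurs(b, a, subst) else {**subst, b: a}
    if isinstance(a, tuple) and isinstance(b, tuple):
        if a[0] != b[0] or len(a) != len(b):
            return None                  # different symbol or number of arguments
        for x, y in zip(a[1:], b[1:]):
            subst = unify(x, y, subst)
            if subst is None:
                return None
        return subst
    return None                          # two different constants, or constant vs compound
```

For example, unifying hate(x, Caesar) with hate(Marcus, y) yields {x: Marcus, y: Caesar}, while p(x, x) and p(A, B) fail because x cannot be bound to both A and B.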

13) Convert the following sentence into a WFF and then write the procedure for conversion to clause form: “All Romans who know Marcus either hate Caesar or think that anyone who hates anyone is crazy.”

Sentence:

All Romans who know Marcus either hate Caesar or think that anyone who hates anyone is crazy.

Well-Formed Formula (WFF):

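∀x ([Roman(x) ∧ know(x, Marcus)] → [hate(x, Caesar) ∨ ∀y ((∃z hate(y, z)) → thinkcrazy(x, y))])

where thinkcrazy(x, y) means "x thinks that y is crazy".

Conversion Procedure:

1. Eliminate implications, replacing a → b with ¬a ∨ b.

2. Reduce the scope of each negation to a single literal using De Morgan's laws, ¬¬p ≡ p, ¬∀x P ≡ ∃x ¬P, and ¬∃x P ≡ ∀x ¬P.

3. Standardize variables so that each quantifier binds a different variable.

4. Move all quantifiers to the left of the formula without changing their relative order (prenex normal form).

5. Eliminate existential quantifiers by Skolemization: replace each existentially quantified variable with a Skolem function of the universally quantified variables that precede it, or with a Skolem constant if there are none.

6. Drop the universal quantifier prefix; all remaining variables are implicitly universally quantified.

7. Convert the matrix into a conjunction of disjunctions by distributing ∨ over ∧.

8. Create a separate clause for each conjunct.

9. Standardize apart the variables so that no variable appears in more than one clause.

Clause Form:

Eliminating the implications gives ¬[Roman(x) ∧ know(x, Marcus)] ∨ [hate(x, Caesar) ∨ ∀y (¬∃z hate(y, z) ∨ thinkcrazy(x, y))]. Pushing the negations inward turns ¬[Roman(x) ∧ know(x, Marcus)] into ¬Roman(x) ∨ ¬know(x, Marcus) and ¬∃z hate(y, z) into ∀z ¬hate(y, z). Moving the quantifiers ∀x ∀y ∀z to the left and dropping them (no existential quantifiers remain, so no Skolemization is needed) leaves a single clause:

¬Roman(x) ∨ ¬know(x, Marcus) ∨ hate(x, Caesar) ∨ ¬hate(y, z) ∨ thinkcrazy(x, y)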

14) Explain when two sentences are logically equivalent, and describe the laws of equivalence.

Two sentences are considered logically equivalent if they have the same truth values under all possible interpretations or truth assignments. In other words, they express the same logical content, and any interpretation that satisfies one sentence also satisfies the other.

The laws of equivalence are standard pairs of logically equivalent expressions, for example double negation (¬¬p ≡ p), the commutative laws (p ∧ q ≡ q ∧ p), the associative laws, the distributive laws (p ∧ (q ∨ r) ≡ (p ∧ q) ∨ (p ∧ r)), De Morgan's laws (¬(p ∧ q) ≡ ¬p ∨ ¬q and ¬(p ∨ q) ≡ ¬p ∧ ¬q), implication elimination (p → q ≡ ¬p ∨ q), and contraposition (p → q ≡ ¬q → ¬p).

These laws are fundamental in logic and form the basis for many reasoning techniques and proof methods. Because equivalent expressions have the same truth value under every interpretation, one may be substituted for the other in logical deductions, transformations, and simplifications (the principle of substitution) without changing the truth value of the overall statement.

For example, consider the logical equivalence between the expressions \( p \land (q \lor r) \) and \( (p \land q) \lor (p \land r) \):

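- Truth Table for \( p \land (q \lor r) \):

| p | q | r | q ∨ r | p ∧ (q ∨ r) |
|---|---|---|---|---|
| T | T | T | T | T |
| T | T | F | T | T |
| T | F | T | T | T |
| T | F | F | F | F |
| F | T | T | T | F |
| F | T | F | T | F |
| F | F | T | T | F |
| F | F | F | F | F |

- Truth Table for \( (p \land q) \lor (p \land r) \):

| p | q | r | p ∧ q | p ∧ r | (p ∧ q) ∨ (p ∧ r) |
|---|---|---|---|---|---|
| T | T | T | T | T | T |
| T | T | F | T | F | T |
| T | F | T | F | T | T |
| T | F | F | F | F | F |
| F | T | T | F | F | F |
| F | T | F | F | F | F |
| F | F | T | F | F | F |
| F | F | F | F | F | F |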

As shown in the truth tables above, the expressions \( p \land (q \lor r) \) and \( (p \land q) \lor (p \land r) \) have the same truth values for all possible truth assignments to their variables. Therefore, they are logically equivalent, and the law of equivalence holds.
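The truth-table test can be automated with a brute-force check over all 2^n truth assignments. This is a minimal sketch; `equivalent` is an illustrative helper name, and Python's `and`/`or` stand in for ∧/∨.

```python
from itertools import product


def equivalent(f, g, n_vars):
    """True if f and g agree on every one of the 2**n_vars truth assignments."""
    return all(f(*values) == g(*values)
               for values in product([True, False], repeat=n_vars))


lhs = lambda p, q, r: p and (q or r)            # p ∧ (q ∨ r)
rhs = lambda p, q, r: (p and q) or (p and r)    # (p ∧ q) ∨ (p ∧ r)
```

`equivalent(lhs, rhs, 3)` checks all eight rows of the tables above and returns True; a single disagreeing row, as in p ∨ (q ∧ r) versus (p ∨ q) ∧ r when p is true and r is false, makes it return False.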

15) What are the methods to illustrate the structural representation of knowledge?

Importance:

Structural representation of knowledge is essential for several reasons:

- Organization: It helps organize vast amounts of information into a coherent and manageable structure, making it easier to navigate and comprehend.

- Understanding: By depicting the relationships between concepts or entities, structural representations enhance understanding and insight into complex topics.

- Retrieval: Structured knowledge facilitates efficient retrieval of information by providing clear pathways and categorizations.

- Reasoning: It enables logical reasoning and inference by explicitly capturing the dependencies and constraints within a knowledge domain.

- Communication: Structural representations serve as effective communication tools, enabling knowledge sharing and collaboration among individuals and systems.

Methods of Structural Representation:

Various methods are used to represent knowledge structurally, each with its own strengths and applicability:

- Concept Maps: Graphical representations that depict relationships between concepts using nodes and edges.

- Ontologies: Formal models that represent knowledge using a hierarchical network of concepts and their interrelationships.

- Entity-Relationship Diagrams (ERDs): Graphical representations used in database design to illustrate the structure of a database schema.

- Knowledge Graphs: Graph-based data structures that represent knowledge as nodes and edges in a directed graph.

- Tree Structures: Hierarchical representations that organize information into nested categories or levels of abstraction.

Conclusion:

In conclusion, structural representation of knowledge plays a crucial role in organizing, understanding, and leveraging information effectively. By employing suitable methods of representation, individuals and organizations can harness the power of structured knowledge to make informed decisions, solve complex problems, and advance knowledge discovery in various domains.

1. Concept Maps:

Concept maps are graphical tools used to represent knowledge in a structured format. They consist of nodes representing concepts or ideas, connected by labeled edges that signify the relationships between them. Concept maps are widely used in education, brainstorming sessions, and knowledge management to visualize the hierarchical structure of knowledge and facilitate understanding of complex topics. They are especially useful for illustrating the connections between different concepts and identifying key relationships within a knowledge domain.

2. Ontologies:

Ontologies are formal models that represent knowledge in a structured and semantically rich manner. They typically consist of a hierarchical network of concepts and their interrelationships, defined using formal logic. Ontologies serve as explicit specifications of a domain's vocabulary and the relationships between different entities, enabling interoperability, data integration, and reasoning across diverse knowledge sources. They are widely used in fields such as artificial intelligence, information retrieval, and the semantic web to facilitate knowledge sharing and reuse.

3. Entity-Relationship Diagrams (ERDs):

Entity-Relationship Diagrams (ERDs) are graphical representations used in database design to illustrate the structure of a database schema. They depict entities (such as objects, people, or events) as nodes and their relationships as labeled edges. ERDs help stakeholders visualize the data model and understand how different entities are related to each other. They are valuable tools for database administrators, software developers, and business analysts in designing and analyzing relational databases, ensuring data integrity, and optimizing query performance.

4. Knowledge Graphs:

Knowledge graphs are graph-based data structures that represent knowledge in the form of nodes (entities) and edges (relationships) in a directed graph. Knowledge graphs capture the semantic connections between entities and enable rich, interconnected representations of structured and unstructured data. They are used in various applications, including search engines, recommendation systems, and natural language processing, to enhance information retrieval and knowledge discovery. Knowledge graphs enable users to explore complex datasets, uncover hidden relationships, and derive actionable insights from data.

5. Tree Structures:

Tree structures represent hierarchical relationships among concepts or entities in a tree-like format. They consist of a single root node and branching nodes representing subcategories or components. Tree structures are commonly used in file systems, organizational charts, and taxonomies to organize and classify information in a hierarchical manner. They facilitate efficient navigation and retrieval of information by organizing it into nested categories or levels of abstraction. Tree structures provide a clear and intuitive way to represent hierarchical relationships and visualize the structure of complex systems.

These methods for structural representation of knowledge provide powerful tools for organizing, visualizing, and reasoning about complex information domains. By choosing the appropriate method based on the specific requirements of the application, stakeholders can effectively manage knowledge, make informed decisions, and solve problems in diverse fields.
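Knowledge graphs and tree-like hierarchies can both be stored as labelled directed edges. The minimal class below is illustrative (the class, method, and relation names are hypothetical, not a real library's API): it stores (subject, relation, object) triples and follows is_a links transitively, the kind of hierarchy walk that tree structures and ontologies support.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Stores (subject, relation, object) triples as labelled directed edges."""

    def __init__(self):
        self.edges = defaultdict(list)      # subject -> [(relation, object), ...]

    def add(self, subj, rel, obj):
        self.edges[subj].append((rel, obj))

    def objects(self, subj, rel):
        """Direct neighbours of `subj` along edges labelled `rel`."""
        return [o for r, o in self.edges[subj] if r == rel]

    def reachable(self, start, rel):
        """Follow `rel` edges transitively (e.g. an is_a hierarchy)."""
        seen, stack = [], [start]
        while stack:
            node = stack.pop()
            for o in self.objects(node, rel):
                if o not in seen:
                    seen.append(o)
                    stack.append(o)
        return seen


kg = KnowledgeGraph()
kg.add("Marcus", "is_a", "Man")
kg.add("Man", "is_a", "Person")
kg.add("Person", "is_a", "Animal")
kg.add("Marcus", "lives_in", "Pompeii")
```

Here `kg.reachable("Marcus", "is_a")` returns `["Man", "Person", "Animal"]`, i.e. every class Marcus belongs to through the hierarchy.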

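16) What do you mean by frames? How is knowledge represented in frames?

A frame is a structured representation, introduced by Marvin Minsky, that describes a stereotyped object, concept, or situation as a collection of slots (attributes) and their values.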

Key Concepts:

Frames consist of the following key components:

- Slots: Slots represent properties or attributes associated with a frame. They define the structure of the frame and can hold values such as literals, pointers to other frames, or procedures.

- Values: Values are the data stored in slots. They can be primitive data types (e.g., strings, numbers) or references to other frames.

- Hierarchy: Frames can be organized into a hierarchical structure, with more specific frames inheriting properties from more general frames. This allows for efficient representation of common attributes shared by related concepts.

- Prototypes: Frames can serve as prototypes or templates for creating new instances. When a new frame is created, it inherits the structure and properties of its prototype.

Representation of Knowledge:

Knowledge is represented in frames by defining frames to represent different concepts or entities and filling in the slots with relevant information. For example, to represent a "Person" frame, we might define slots such as "Name", "Age", "Gender", etc., and populate them with specific values for individual persons.

Frames allow for the representation of complex, structured knowledge in a natural and intuitive way. They enable the encapsulation of domain-specific knowledge, support inheritance and abstraction, and facilitate reasoning and problem-solving tasks in artificial intelligence systems.
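A minimal sketch of frames with slot inheritance. The `Frame` class and the example frames are hypothetical: each frame holds its own slots plus an optional parent link, and a slot that is missing locally is inherited from the nearest ancestor that defines it.

```python
class Frame:
    """A frame: a named collection of slots with an optional parent (is-a link)."""

    def __init__(self, frame_id, parent=None, **slots):
        self.frame_id = frame_id
        self.parent = parent
        self.slots = dict(slots)

    def get(self, slot):
        """Look up a slot locally, then inherit it from the nearest ancestor."""
        frame = self
        while frame is not None:
            if slot in frame.slots:
                return frame.slots[slot]
            frame = frame.parent
        raise KeyError(f"{self.frame_id} has no slot {slot!r}")


# Hypothetical hierarchy: Person -> Student -> one individual instance
person = Frame("Person", legs=2, can_think=True)
student = Frame("Student", parent=person, occupation="student")
ravi = Frame("Ravi", parent=student, name="Ravi", age=21, gender="male")
```

`ravi.get("name")` is found locally, while `ravi.get("legs")` returns 2 inherited from the Person frame; a more specific frame could override that default simply by defining its own `legs` slot.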

17) Explain the process of finding the right structure of knowledge representation.

1. Domain Analysis:

Begin by analyzing the domain or problem space to understand its key concepts, entities, relationships, and constraints. Identify the relevant attributes and properties of the entities and the types of relationships between them. This analysis helps in defining the scope and requirements of the knowledge representation.

2. Identify Representation Requirements:

Based on the domain analysis, determine the specific requirements and objectives of the knowledge representation. Consider factors such as the complexity of the domain, the types of reasoning tasks to be performed, the scalability and efficiency requirements, and the compatibility with existing systems or standards.

3. Evaluate Representation Schemes:

Explore different knowledge representation schemes and techniques that are suitable for the identified requirements. This may include considering methods such as frames, semantic networks, ontologies, logic-based representations, and probabilistic models. Evaluate the strengths, weaknesses, and trade-offs of each representation scheme in the context of the domain and requirements.

4. Prototyping and Iterative Design:

Create prototypes or mockups of the knowledge representation using selected schemes or combinations of schemes. Test the prototypes with sample data and use cases to assess their effectiveness in capturing and representing the relevant knowledge. Iterate on the design based on feedback and insights gained from testing.

5. Consider Knowledge Acquisition and Maintenance:

Take into account the processes of knowledge acquisition and maintenance when designing the representation structure. Consider how new knowledge will be acquired and integrated into the representation, as well as how existing knowledge will be updated or revised over time. Ensure that the representation structure supports these processes efficiently and effectively.

6. Validation and Refinement:

Evaluate the chosen representation structure through rigorous testing and verification. Use benchmark datasets, expert reviews, and empirical evaluations to assess the performance, accuracy, and reliability of the representation in various scenarios. Refine the structure as needed based on the results of validation and feedback from stakeholders.

By following these steps, practitioners can systematically identify, design, and refine the right structure of knowledge representation that best meets the needs and objectives of the artificial intelligence system.

18)How is knowledge representation illustrated in semantic nets?

Illustration of Knowledge Representation:

Knowledge representation in semantic nets involves the following elements:

- Nodes: Nodes represent concepts, entities, or objects in the knowledge domain. Each node typically corresponds to a specific concept or entity, such as a person, place, or event. Nodes can also represent attributes or properties associated with the concepts.

- Edges: Edges represent relationships or connections between nodes. They indicate how different concepts or entities are related to each other in the knowledge domain. Edges are labeled to specify the type of relationship between nodes, such as "is-a", "part-of", "has-property", etc.

- Hierarchical Structure: Semantic nets often have a hierarchical structure, with nodes organized into levels or layers based on their specificity or generality. This hierarchy allows for the representation of concepts at different levels of abstraction and facilitates navigation and reasoning in the knowledge domain.

- Directed Graph: Semantic nets are typically represented as directed graphs, in which each edge points from one node to another. The direction matters for asymmetric relationships: "cat is-a mammal" holds, but "mammal is-a cat" does not.

Example:

As an example, consider a semantic net representing knowledge about animals:

- Nodes could represent various animal species (e.g., "cat", "dog", "bird") and their attributes (e.g., "fur", "wings"), with attributes linked to the species or categories that have them by "has-property" edges.

- Edges could represent relationships such as "is-a" (e.g., "cat" is-a "mammal") or "eats" (e.g., "cat" eats "mouse").

- The hierarchical structure of the semantic net would organize animals into categories such as mammals, birds, reptiles, etc., with specific species as subtypes of these categories.
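The animal example above can be sketched as a set of labeled, directed edges. This is a minimal illustration, assuming edges are stored as (subject, relation, object) triples and that attributes hang off categories through "has-property" edges; the helper names `related`, `ancestors` and `has_property` are hypothetical. Following "is-a" links upward gives property inheritance: a cat has fur because a mammal does.

```python
# A semantic net as labeled, directed edges: (subject, relation, object).
edges = {
    ("cat", "is-a", "mammal"),
    ("dog", "is-a", "mammal"),
    ("bird", "is-a", "animal"),
    ("mammal", "is-a", "animal"),
    ("mammal", "has-property", "fur"),
    ("bird", "has-property", "wings"),
    ("cat", "eats", "mouse"),
}

def related(node, relation):
    """Objects directly linked from `node` by an edge labeled `relation`."""
    return {o for (s, r, o) in edges if s == node and r == relation}

def ancestors(node):
    """Follow is-a links upward to collect every more general category."""
    result, frontier = set(), [node]
    while frontier:
        for parent in related(frontier.pop(), "is-a"):
            if parent not in result:
                result.add(parent)
                frontier.append(parent)
    return result

def has_property(node, prop):
    """Property inheritance: check the node itself, then all its ancestors."""
    return any(prop in related(n, "has-property") for n in {node} | ancestors(node))

print(sorted(ancestors("cat")))      # ['animal', 'mammal']
print(has_property("cat", "fur"))    # True (inherited from mammal)
print(has_property("cat", "wings"))  # False
```

Storing the fact "has fur" once on "mammal" rather than on every species is the economy that the hierarchical structure of a semantic net provides.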

Semantic nets provide a visual and intuitive way to represent and reason about knowledge in artificial intelligence systems. They enable the encoding of domain-specific knowledge in a structured format, facilitating tasks such as information retrieval, inference, and decision-making.

19)What do you mean by understanding? What makes understanding hard?

Key Aspects of Understanding:

Understanding typically involves the following key aspects:

- Context: Understanding requires considering the context in which information is presented and interpreting it in relation to relevant background knowledge or prior experiences.

- Inference: Understanding often involves making inferences or drawing conclusions based on available information, reasoning processes, and logical rules.

- Abstraction: Understanding may involve abstracting key concepts or generalizing from specific instances to form higher-level conceptual representations.

- Integration: Understanding requires integrating diverse sources of information, recognizing patterns and relationships, and synthesizing them into a coherent mental model.

- Application: Understanding enables applying knowledge to solve new problems, make predictions, generate explanations, and adapt to novel situations.

Challenges in Achieving Understanding:

Achieving understanding is challenging in AI due to several factors:

- Ambiguity: Natural language and real-world data often contain ambiguity, vagueness, and context-dependent meanings, making it difficult to accurately interpret and understand them.

- Complexity: Many real-world phenomena are inherently complex, involving multiple interacting factors, nonlinear relationships, and emergent properties. Understanding such complexity requires advanced modeling and reasoning capabilities.

- Uncertainty: Real-world data is often uncertain, incomplete, or noisy, leading to uncertainty in the conclusions drawn from them. Dealing with uncertainty and making reliable decisions in the face of uncertainty is a major challenge for AI systems.

- Background Knowledge: Understanding often relies on extensive background knowledge and domain expertise, which may be difficult to acquire or represent explicitly in AI systems.

- Context Sensitivity: Understanding requires considering the context in which information is presented and interpreting it appropriately. Contextual understanding poses challenges for AI systems, particularly in understanding nuanced or culturally-specific meanings.

Despite these challenges, advances in AI techniques such as natural language processing, machine learning, knowledge representation, and reasoning have led to significant progress in achieving deeper levels of understanding in AI systems. However, achieving human-like understanding remains an ongoing research goal in the field of artificial intelligence.

20)Explain the structure of scripts and schemas in understanding.

Scripts:

Scripts represent knowledge about stereotypical sequences of events or actions that typically occur in familiar situations. They define the expected sequence of actions, participants, and outcomes associated with specific types of events or activities. The structure of scripts typically includes the following components:

- Initiation: The starting point of the script, which specifies the conditions or triggers that initiate the event or activity.

- Actions: The sequence of actions or steps involved in carrying out the event or activity, often organized into a chronological order.

- Participants: The individuals, objects, or entities involved in the event or activity, along with their roles and relationships.

- Goals: The desired outcomes or objectives of the event or activity, which guide the behavior of the participants.

- Resolution: The final state or outcome of the event or activity, including any consequences or follow-up actions.
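A script can be sketched as a record holding the components listed above, here using the classic restaurant example associated with Schank and Abelson's work on scripts. The field names and the `fill_in_story` helper are hypothetical illustrations. The helper shows why scripts aid understanding: if a story mentions ordering and paying, the script lets the system infer that the unmentioned "eat" step also happened.

```python
# Hypothetical restaurant script using the components described above.
restaurant_script = {
    "initiation": "customer is hungry and has money",
    "participants": {"customer", "waiter", "cook", "cashier"},
    "actions": ["enter", "order", "eat", "pay", "leave"],  # chronological
    "goals": "customer is no longer hungry",
    "resolution": "customer has less money; restaurant has more money",
}

def fill_in_story(script, mentioned_events):
    """Script-based inference: assume every scripted action between the
    first and last events the story mentions also took place."""
    actions = script["actions"]
    positions = [actions.index(e) for e in mentioned_events if e in actions]
    if not positions:
        return []
    return actions[min(positions): max(positions) + 1]

# "John went to a restaurant. He ordered lobster. He paid and left."
story = ["enter", "order", "pay", "leave"]
print(fill_in_story(restaurant_script, story))
# ['enter', 'order', 'eat', 'pay', 'leave']  -> the system infers John ate
```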

Schemas:

Schemas represent broader, more abstract knowledge structures that capture common patterns, themes, or concepts in the world. They serve as mental frameworks for organizing and interpreting information, guiding perception, memory, and decision-making. The structure of schemas is more flexible and adaptive than scripts and may include:

- Concepts: Abstract representations of categories, classes, or types of objects, events, or phenomena.

- Attributes: Properties associated with concepts, defining their features or qualities.

- Relations: Relationships and connections between concepts, indicating how they are related or interact with each other.

- Prototypes: Idealized or typical examples of concepts that serve as reference points for categorization and comparison.

- Scripts: Schemas may incorporate scripts as specialized knowledge structures for specific types of events or activities.
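The role of prototypes as reference points can be sketched as follows. This is a simplified illustration, assuming each schema is reduced to a prototype dictionary of attribute values and that a new observation is assigned to the schema whose prototype it matches on the most attributes; the attribute names and the `categorize` function are hypothetical. A penguin does not fly, yet it still matches the "bird" prototype better than the "mammal" one.

```python
# Hypothetical schemas reduced to prototypes: attribute -> typical value.
schemas = {
    "bird": {"has_wings": True, "flies": True, "lays_eggs": True, "has_fur": False},
    "mammal": {"has_wings": False, "flies": False, "lays_eggs": False, "has_fur": True},
}

def categorize(observation):
    """Pick the schema whose prototype agrees with the most observed attributes."""
    def score(prototype):
        return sum(1 for attr, value in observation.items()
                   if prototype.get(attr) == value)
    return max(schemas, key=lambda name: score(schemas[name]))

penguin = {"has_wings": True, "flies": False, "lays_eggs": True, "has_fur": False}
print(categorize(penguin))  # bird (matches 3 of 4 bird attributes, 1 of 4 mammal)
```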

Scripts and schemas provide powerful mechanisms for representing and organizing knowledge in the human mind and AI systems. They enable efficient processing of information, facilitate comprehension and prediction, and support adaptive behavior in diverse contexts.

21)How do you understand single and multiple sentences in natural language understanding?

Understanding Single Sentences:

When processing a single sentence, the following steps are typically involved in natural language understanding:

- Tokenization: The sentence is segmented into individual words or tokens.

- Part-of-Speech Tagging: Each token is assigned a part-of-speech tag (e.g., noun, verb, adjective) to indicate its grammatical role in the sentence.

- Dependency Parsing: The syntactic structure of the sentence is analyzed to identify relationships between words and phrases, such as subject-verb-object dependencies.

- Semantic Analysis: The meaning of the sentence is inferred based on the syntactic structure, lexical semantics, and contextual information. This may involve tasks such as word sense disambiguation, semantic role labeling, and entity recognition.

- Pragmatic Analysis: The intended meaning and implications of the sentence are interpreted in light of pragmatic factors such as speaker intentions, conversational context, and common knowledge.
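The first steps of the single-sentence pipeline above can be sketched with the standard library alone. This is a toy under explicit assumptions: the `LEXICON` is a hypothetical hand-written dictionary (real taggers are trained on annotated corpora), and `subject_verb_object` is a crude positional heuristic standing in for dependency parsing and semantic role labeling, valid only for simple active-voice sentences. Pragmatic analysis is not attempted.

```python
import re

# Hypothetical toy lexicon mapping words to part-of-speech tags.
LEXICON = {
    "the": "DET", "a": "DET",
    "cat": "NOUN", "mouse": "NOUN", "dog": "NOUN",
    "chased": "VERB", "saw": "VERB",
    "small": "ADJ", "big": "ADJ",
}

def tokenize(sentence):
    """Tokenization: lowercase word tokens, punctuation dropped."""
    return re.findall(r"[a-z]+", sentence.lower())

def pos_tag(tokens):
    """Part-of-speech tagging by dictionary lookup; unknown words get 'UNK'."""
    return [(token, LEXICON.get(token, "UNK")) for token in tokens]

def subject_verb_object(tagged):
    """Crude stand-in for parsing plus role labeling: the last noun before
    the verb is the agent, the first noun after it is the patient."""
    verb_index = next(i for i, (_, tag) in enumerate(tagged) if tag == "VERB")
    nouns_before = [w for w, tag in tagged[:verb_index] if tag == "NOUN"]
    nouns_after = [w for w, tag in tagged[verb_index + 1:] if tag == "NOUN"]
    return {"agent": nouns_before[-1], "action": tagged[verb_index][0],
            "patient": nouns_after[0]}

tagged = pos_tag(tokenize("The small cat chased a mouse."))
print(tagged)
# [('the', 'DET'), ('small', 'ADJ'), ('cat', 'NOUN'), ('chased', 'VERB'), ('a', 'DET'), ('mouse', 'NOUN')]
print(subject_verb_object(tagged))
# {'agent': 'cat', 'action': 'chased', 'patient': 'mouse'}
```

A passive sentence such as "The mouse was chased by the cat" would defeat this heuristic, which is why real systems rely on a genuine syntactic parse before assigning semantic roles.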

Understanding Multiple Sentences:

Understanding multiple sentences or longer texts involves additional challenges and techniques:

- Coreference Resolution: Resolving references to entities mentioned across multiple sentences (e.g., pronouns, definite descriptions) to determine their referents.

- Anaphora Resolution: Identifying the antecedent of an anaphoric expression, such as a pronoun ("she", "it") that refers back to an entity mentioned earlier in the same or a previous sentence. It is closely related to coreference resolution.

- Discourse Analysis: Analyzing the structure and coherence of the discourse or text, including relationships between sentences, discourse markers, and rhetorical structures.

- Contextual Understanding: Incorporating contextual information from preceding and subsequent sentences to disambiguate meanings, resolve ambiguities, and infer implicit information.

- Textual Inference: Making logical inferences and drawing conclusions from the content of multiple sentences, including tasks such as textual entailment and paraphrase detection.
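Resolving pronouns across sentences can be illustrated with a simple recency heuristic: link each pronoun to the most recently mentioned entity whose gender agrees with it. This is a toy sketch, not a real coreference system; the `ENTITIES` and `PRONOUNS` tables and the `resolve` function are hypothetical, and real resolvers also use syntax, number agreement and world knowledge.

```python
import re

# Hypothetical hand-coded entity and pronoun tables with gender labels.
ENTITIES = {"john": "male", "mary": "female", "book": "neuter"}
PRONOUNS = {"he": "male", "him": "male", "she": "female", "her": "female",
            "it": "neuter"}

def resolve(sentences):
    """Link each pronoun to the most recent gender-compatible entity."""
    history = []  # entities mentioned so far, most recent last
    links = []
    for sentence in sentences:
        for word in re.findall(r"[a-z]+", sentence.lower()):
            if word in ENTITIES:
                history.append(word)
            elif word in PRONOUNS:
                gender = PRONOUNS[word]
                antecedent = next((e for e in reversed(history)
                                   if ENTITIES[e] == gender), None)
                links.append((word, antecedent))
    return links

story = ["John gave Mary a book.", "She thanked him.", "It was new."]
print(resolve(story))
# [('she', 'mary'), ('him', 'john'), ('it', 'book')]
```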

By applying these techniques and leveraging linguistic knowledge, machine learning algorithms, and semantic representations, natural language understanding systems can effectively process both single and multiple sentences in diverse contexts and domains.

22)Explain the role of keyword matching in understanding. Also, explain semantic analysis.

Role of Keyword Matching:

Keyword matching involves identifying specific words or phrases in a text that match predefined keywords or patterns. It is often used as a simple and efficient method for extracting relevant information from textual data. The role of keyword matching in understanding includes:

- Information Retrieval: Keyword matching is used to retrieve documents or passages containing specific keywords or terms, enabling users to find relevant information quickly.

- Topic Detection: By identifying keywords related to specific topics or themes, keyword matching helps in detecting the subject matter of a text and categorizing it accordingly.

- Entity Recognition: Keyword matching can be used to recognize named entities such as people, organizations, locations, or products mentioned in a text, based on predefined lists of entity names.

- Query Expansion: In information retrieval systems, user queries are often expanded with synonyms, related terms, or alternative spellings of the keywords, so that keyword matching also retrieves relevant documents that use different wording.

- Rule-Based Processing: Keyword matching is often employed in rule-based systems for extracting structured information or triggering predefined actions based on the presence of specific keywords or patterns in the text.
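Keyword-based topic detection can be sketched as counting overlaps between a text's words and a predefined keyword list per topic. The topics and keyword sets below are hypothetical examples, and `detect_topics` is an illustrative name, not a library function.

```python
import re

# Hypothetical keyword lists for rule-based topic detection.
TOPIC_KEYWORDS = {
    "sports": {"match", "goal", "team", "score"},
    "weather": {"rain", "sunny", "forecast", "temperature"},
    "finance": {"stock", "market", "price", "bank"},
}

def detect_topics(text):
    """Return the topic(s) whose keywords occur most often; [] if none match."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {topic: len(words & keywords)
              for topic, keywords in TOPIC_KEYWORDS.items()}
    best = max(scores.values())
    return [topic for topic, s in scores.items() if s == best and s > 0]

print(detect_topics("The team scored a late goal to win the match."))  # ['sports']
```

The example also exposes the weakness of pure keyword matching: "scored" does not match the keyword "score", because matching is exact. Stemming, query expansion, or semantic analysis is needed to bridge such gaps.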

Semantic Analysis:

Semantic analysis, also known as semantic processing or semantic interpretation, involves extracting meaning from textual data by analyzing its underlying semantics, context, and relationships. It aims to understand the intended meaning of the text beyond its literal interpretation. The role of semantic analysis in understanding includes:

- Meaning Extraction: Semantic analysis identifies the meaning of words, phrases, and sentences by considering their context, syntactic structure, and semantic relationships.

- Semantic Role Labeling: Semantic analysis identifies the roles played by different entities and concepts in a sentence, such as agents, patients, locations, or instruments, to understand their relationships and contributions to the overall meaning.

- Semantic Parsing: Semantic analysis parses sentences into formal representations such as semantic graphs, logical forms, or semantic frames, capturing the semantics and relationships between different elements of the sentence.

- Disambiguation: Semantic analysis resolves lexical and syntactic ambiguities in the text by considering contextual cues, domain knowledge, and semantic constraints, ensuring accurate interpretation of the intended meaning.

- Inference: Semantic analysis involves making logical inferences and drawing conclusions from the content of the text, such as entailments, presuppositions, or conversational implicatures.
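Disambiguation, one of the semantic analysis tasks above, can be illustrated with a simplified form of the Lesk algorithm: choose the word sense whose dictionary definition (gloss) shares the most content words with the surrounding sentence. The two glosses for "bank" and the stopword list are hand-written assumptions for illustration, not entries from WordNet or any real lexicon.

```python
import re

# Hypothetical sense inventory: sense label -> short gloss.
SENSES = {
    "bank": {
        "bank/finance": "an institution that accepts deposits of money and makes loans",
        "bank/river": "the sloping land beside a body of water such as a river",
    }
}
STOPWORDS = {"a", "an", "the", "of", "and", "such", "as", "that", "to",
             "on", "at", "in", "he", "she", "his", "her"}

def content_words(text):
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS

def simplified_lesk(word, sentence):
    """Return the sense whose gloss overlaps most with the sentence context."""
    context = content_words(sentence) - {word}
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense

print(simplified_lesk("bank", "He sat on the bank of the river and watched the water."))
# bank/river   (shares "river" and "water" with the gloss)
print(simplified_lesk("bank", "She went to the bank to deposit money for a loan."))
# bank/finance (shares "money"; "deposit"/"loan" miss "deposits"/"loans")
```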

By combining keyword matching with semantic analysis, NLU systems can effectively process and understand textual data, enabling a wide range of applications such as information retrieval, question answering, sentiment analysis, and machine translation.
